The Evolution of Integrated Circuits: From Idea to Today

Integrated circuits (ICs) are at the heart of nearly all electronic systems today, from computers and phones to cars and industrial machinery. Their invention ranks among the most important technological advances of the 20th century: it dramatically shrank electronic systems while improving their speed, capability, and efficiency.

Beginnings and Invention (1950s–1960s)

The story of ICs begins in the post–World War II era, as engineers struggled with bulky vacuum tubes and circuits wired together from discrete transistors. In 1958, Jack Kilby at Texas Instruments built the first working integrated circuit, using germanium. A year later, Robert Noyce at Fairchild Semiconductor improved on the concept with silicon and planar technology, which allowed multiple transistors and other components to be fabricated and interconnected on a single chip. This development laid the foundation for today's semiconductor technology.

The Coming of Silicon and Commercialization (1970s)

Silicon emerged as the dominant material for IC manufacturing during the 1970s because of its favorable electrical properties and relative ease of processing. Intel's introduction of the 4004 in 1971, the first commercial microprocessor, was a landmark moment. This 4-bit processor, with roughly 2,300 transistors on a tiny chip, ushered in the era of the programmable processor on a chip and revolutionized computing.

Moore's Law and the VLSI Era (1980s–1990s)

As the technology evolved, the number of transistors on a single chip continued to double approximately every two years, a trend famously known as Moore's Law. This scaling enabled Very Large-Scale Integration (VLSI), which placed millions of transistors on a single chip. ICs became smaller, faster, and cheaper, and personal computers, mobile phones, and other digital devices became ubiquitous.
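The doubling rule can be made concrete with a little arithmetic. The sketch below is only an idealized illustration, not a model of any real product line: it assumes a fixed two-year doubling period and takes the Intel 4004 (1971, roughly 2,300 transistors) as a baseline. The function name `projected_transistors` and its parameters are hypothetical, chosen for this example.

```python
def projected_transistors(base_count, base_year, target_year,
                          doubling_period_years=2.0):
    """Idealized Moore's Law projection.

    Assumes the transistor count doubles once every
    `doubling_period_years`, with no slowdown or jumps.
    """
    doublings = (target_year - base_year) / doubling_period_years
    return base_count * 2 ** doublings


# Assumed baseline: Intel 4004 (1971), roughly 2,300 transistors.
for year in (1971, 1981, 1991, 2001):
    count = projected_transistors(2300, 1971, year)
    print(f"{year}: about {count:,.0f} transistors")
```

Under these assumptions, twenty years of doubling (ten doublings) multiplies the count by 1,024, which takes a chip from thousands of transistors to the millions associated with the VLSI era. Real chips deviated from this curve in both directions, so the numbers are best read as an order-of-magnitude guide.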

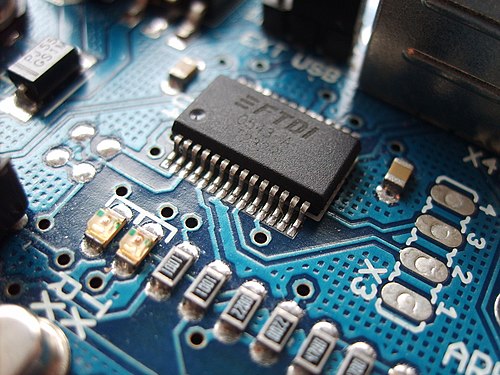

The System-on-Chip Revolution (2000s)

In the early 2000s, designers began consolidating multiple functions (CPU, GPU, memory, I/O) onto single chips, an approach known as System-on-Chip (SoC). These integrated solutions powered smartphones, tablets, and embedded systems, delivering high performance at low power. Meanwhile, advances in CMOS technology pushed transistor feature sizes below 100 nanometers, achieving even greater density.

Contemporary ICs and Advanced Nodes (2010s–2020s)

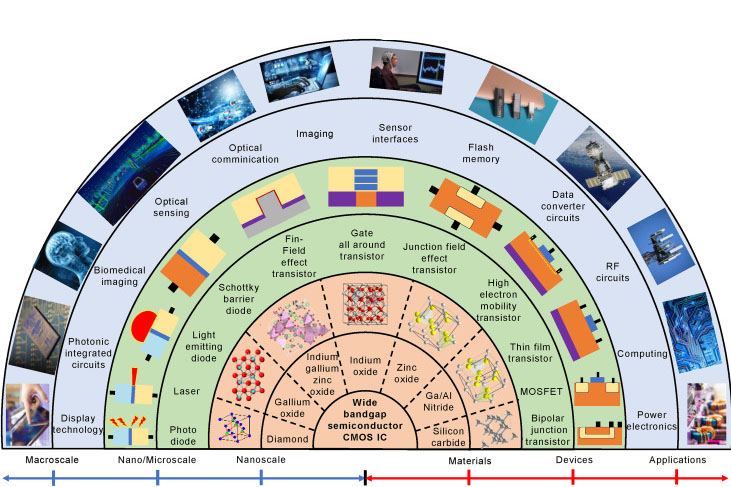

Integrated circuits are now manufactured at process nodes as advanced as 3 nm, with tens of billions of transistors on a single chip. Transistor architectures such as FinFET and gate-all-around (GAA) improve both energy efficiency and performance. Meanwhile, 3D ICs and chiplets offer vertical stacking and modular integration, addressing the physical limitations of traditional scaling.

Beyond Moore's Law: AI, Quantum, and Neuromorphic Chips

As conventional scaling approaches its physical limits, R&D is shifting toward architecture-level innovation. Special-purpose ICs for AI (e.g., GPUs and TPUs), brain-inspired neuromorphic chips, and emerging quantum processors are all reshaping the future of computing. At the same time, materials like gallium nitride (GaN) and silicon carbide (SiC) are being explored for high-power and high-frequency uses.

Summary Timeline: The Evolution of Integrated Circuits

| Period | Key Developments |
| --- | --- |
| 1950s–1960s | Invention of the first IC by Jack Kilby (1958) using germanium; Robert Noyce improves it using silicon and planar technology (1959). |
| 1970s | Silicon becomes the standard material; Intel releases the first commercial microprocessor, the 4004 (1971). |
| 1980s–1990s | Moore’s Law drives exponential transistor growth; Very Large-Scale Integration (VLSI) enables millions of transistors per chip; personal electronics become widespread. |
| 2000s | System-on-Chip (SoC) design consolidates multiple functions on a chip; CMOS advances push features below 100 nm. |
| 2010s–2020s | Advanced nodes reach 3 nm; technologies like FinFET, GAA, 3D ICs, and chiplets enhance performance and density. |
| Present and Beyond | Shift toward AI, neuromorphic, and quantum chips; exploration of GaN and SiC for specialized, high-power applications. |

For more information, please check Stanford Electronics.

Conclusion

From a few transistors on a chip to over a hundred billion, the evolution of integrated circuits has transformed modern life. As we enter a new age of special-purpose computing and heterogeneous integration, ICs will remain the backbone of innovation—driving breakthroughs in everything from medicine and mobility to climate tech and space exploration.